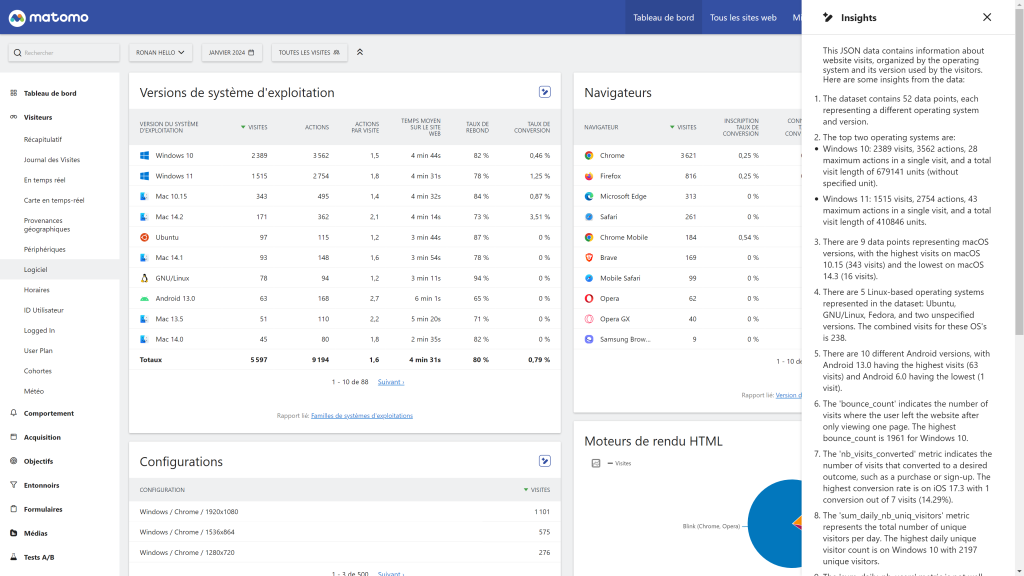

AI-Powered Report Insights

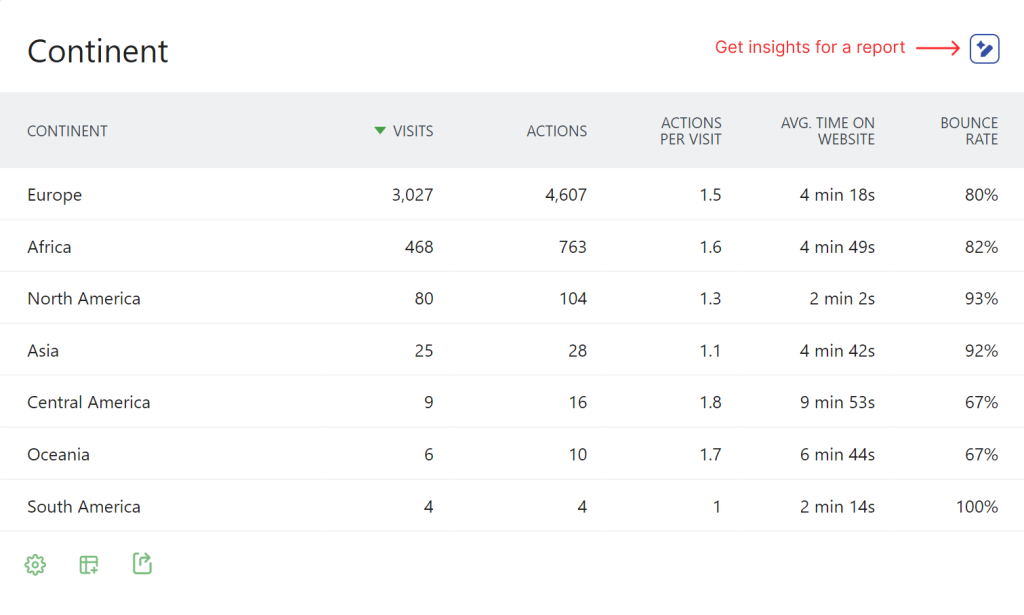

Get instant AI-generated insights for any Matomo report. The plugin adds an "Insights" button to all report widgets that analyzes your data and provides actionable recommendations.

- Works with all report types (visitors, actions, referrers, goals, custom dimensions, custom reports, etc.)

- Supports data tables, evolution graphs, and series visualizations

- Conversation mode: ask follow-up questions about your report data

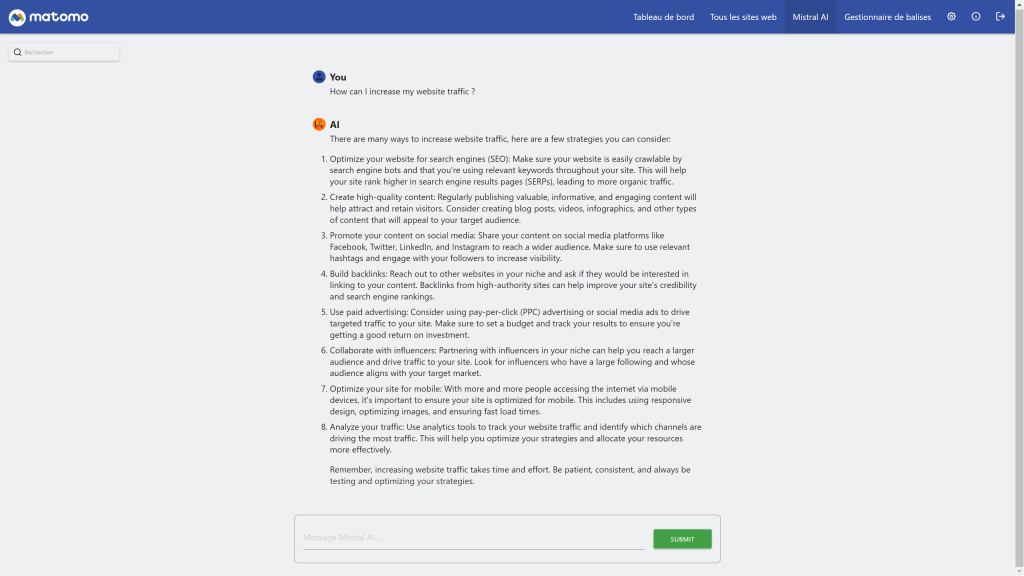

Dedicated AI Chat

A full-featured chat interface for asking questions about your analytics data.

- Accessible from the main menu under "MistralAI"

- Real-time streaming responses (with automatic fallback for unsupported servers)

Flexible Model Configuration

Choose from preset models or specify custom model names.

Preset Models:

- Mistral Large

- Mistral Medium

- Mistral Small

- Open Mistral Nemo

- Codestral

- Pixtral Large

- Ministral 8B

- Ministral 3B

Custom Models: Specify any model name that is not in the preset list. This is useful for:

- New Mistral models

- Self-hosted LLMs (LLaMA, Mistral, etc.)

- Other MistralAI-compatible providers

Multi-Site Configuration

Configure different AI settings per website using Measurable Settings:

- Override the system-wide host, API key, and model per site

- Customize prompts for specific websites

- Leave fields empty to use system defaults

Custom Host Support

Connect to any MistralAI-compatible API endpoint:

- MistralAI (default)

- Azure MistralAI

- Self-hosted solutions (Ollama, LocalAI, vLLM, etc.)

- Other providers (Anthropic via proxy, etc.)

Note: API key is optional when using custom hosts, making it easy to connect to local LLM instances.
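As a rough illustration of what a request to a compatible endpoint can look like, the sketch below builds (without sending) a chat-completions style POST request and only adds the `Authorization` header when an API key is given. The function name `build_chat_request`, the local URL, and the model name are assumptions for illustration; the plugin's own PHP implementation may differ.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(host: str, model: str, messages: list,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a chat-completions style POST request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Skipped for local hosts that do not require authentication.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(host, data=body, headers=headers, method="POST")


# Hypothetical local endpoint and model name, for illustration only.
request = build_chat_request(
    "http://localhost:11434/v1/chat/completions",
    "mistral",
    [{"role": "user", "content": "Summarize last week's traffic."}],
)
```

Passing `api_key="..."` produces the same request with a bearer token attached, which is the shape a hosted endpoint typically expects.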

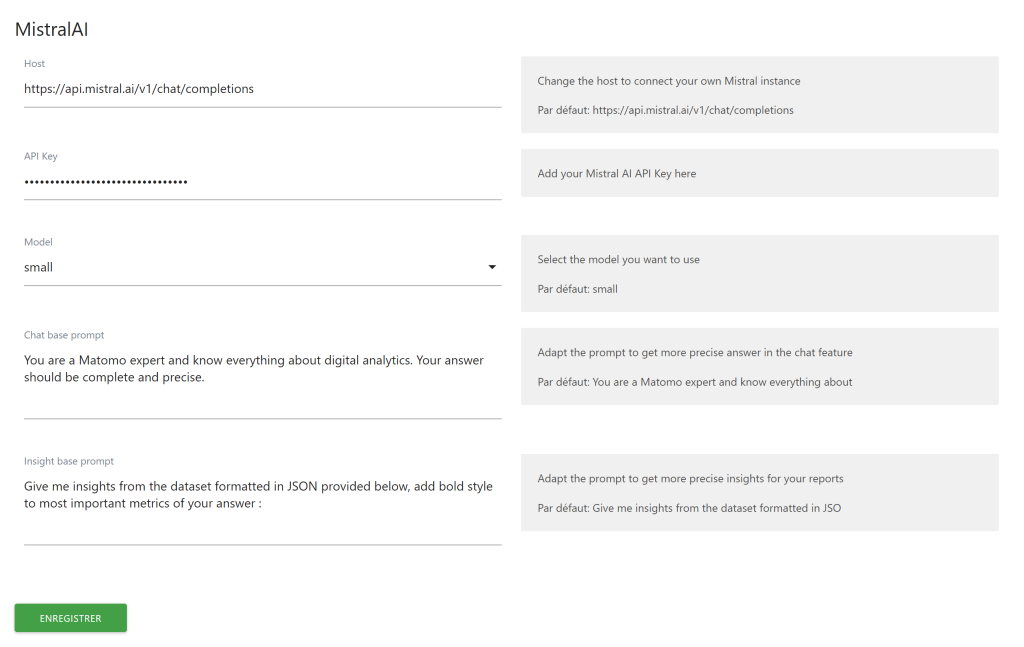

Customizable Prompts

Tailor the AI's behavior with custom prompts:

- Chat Base Prompt: Customize how the AI responds in conversations

- Insight Base Prompt: Customize how the AI analyzes report data

Multi-Language Support

Full translations are available in:

- English

- German (Deutsch)

- Spanish (Español)

- French (Français)

- Italian (Italiano)

- Dutch (Nederlands)

- Swedish (Svenska)

Integrate AI-powered analytics insights and chat functionality into your Matomo instance using MistralAI or any MistralAI-compatible API.

Installation

From Marketplace

- Go to the Administration panel as a super user

- Navigate to the Marketplace section and select "Plugins"

- Search for "MistralAI"

- Install and activate the plugin

Manual Installation

- Download the plugin from GitHub

- Extract it to your /plugins folder

- Activate the plugin in Matomo's Plugin settings

Configuration

System Settings (Global)

Navigate to Administration > General Settings > MistralAI to configure:

| Setting | Description |
| --- | --- |
| Host | API endpoint URL. Default: https://api.openai.com/v1/chat/completions |
| API Key | Your MistralAI API key (required for MistralAI, optional for custom hosts) |
| Model (Preset) | Select from available model presets |
| Model (Custom) | Override preset with a custom model name |
| Chat Base Prompt | System prompt for chat conversations |
| Insight Base Prompt | System prompt for report insights |

Measurable Settings (Per-Site)

All system settings can be overridden per website in the site's Measurable Settings. Leave fields empty to use system defaults.

This is useful for:

- Using different models for different sites

- Customizing prompts for specific website contexts

- Using separate API keys per site
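The "leave empty to use system defaults" rule can be pictured as a simple fallback merge. The sketch below is an illustration only: the setting keys, the example host, and the function `resolve_settings` are hypothetical and are not taken from the plugin's code.

```python
def resolve_settings(system: dict, site: dict) -> dict:
    """Return system settings with non-empty per-site values applied on top."""
    resolved = dict(system)
    for key, value in site.items():
        if value not in (None, ""):  # an empty field keeps the system default
            resolved[key] = value
    return resolved


# Example values only; keys and names are assumptions for illustration.
system_settings = {
    "host": "https://llm.example.invalid/v1/chat/completions",
    "api_key": "system-key",
    "model": "mistral-small-latest",
}
site_settings = {"host": "", "api_key": "", "model": "mistral-large-latest"}

effective = resolve_settings(system_settings, site_settings)
```

Here the site overrides only the model, so the host and API key still come from the system settings.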

Usage

Getting Report Insights

- Navigate to any report in Matomo

- Click the "Insights" button (sparkle icon) in the report header

- View AI-generated insights in the side panel

- Ask follow-up questions to dive deeper into the data

Using the Chat

- Go to MistralAI in the main menu

- Type your question about analytics

- Receive AI-powered responses with streaming support

- Continue the conversation with follow-up questions

API Reference

The plugin provides the following API methods:

| Method | Description |
| --- | --- |
| MistralAI.getResponse | Get AI response for messages (non-streaming) |
| MistralAI.getStreamingResponse | Get AI response with SSE streaming |
| MistralAI.getInsight | Get AI insights for report data |
| MistralAI.getModels | Get list of available preset models |

Parameters

MistralAI.getResponse

- idSite - Site ID

- period - Period (day, week, month, year)

- date - Date string

- messages - Conversation messages in MistralAI format

MistralAI.getInsight

- idSite - Site ID

- period - Period (day, week, month, year)

- date - Date string

- reportId - Report identifier

- messages - Conversation messages

All API methods require appropriate view permissions for the requested site.
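Matomo plugin methods are normally reachable through the Reporting API at `index.php` with `module=API` and a `method` parameter. The sketch below assembles such a call for `MistralAI.getResponse` as a POST body. Sending `messages` as a JSON-encoded string is an assumption, since the expected encoding is not documented here; the Matomo URL, token, and helper name `build_api_call` are placeholders.

```python
import json
from urllib.parse import parse_qs, urlencode


def build_api_call(matomo_url: str, method: str, token_auth: str,
                   id_site: int, period: str, date: str, messages=None):
    """Return (endpoint, form_body) for a Matomo Reporting API POST request."""
    params = {
        "module": "API",
        "method": method,
        "idSite": id_site,
        "period": period,
        "date": date,
        "format": "JSON",
        "token_auth": token_auth,  # sent in the POST body rather than the URL
    }
    if messages is not None:
        # Assumption: the plugin accepts messages as a JSON-encoded string.
        params["messages"] = json.dumps(messages)
    return matomo_url.rstrip("/") + "/index.php", urlencode(params)


# Placeholder instance URL and token, for illustration only.
endpoint, body = build_api_call(
    "https://matomo.example.invalid/",
    "MistralAI.getResponse",
    "YOUR_TOKEN",
    1,
    "day",
    "yesterday",
    [{"role": "user", "content": "Which pages gained the most visits?"}],
)
fields = parse_qs(body)  # decode again to inspect what would be sent
```

The token used must belong to a user with at least view access to the requested site.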

Requirements

- Matomo 5.0.0 or higher

- PHP 7.4 or higher

- Valid API key (for MistralAI) or accessible custom host

Support

- Issues: GitHub Issues

- Documentation: Plugin Homepage

- Email: ronan@openmost.io

How do I install this plugin?

This plugin is available in the official Matomo Marketplace:

- Go to the Administration panel

- Navigate to the Marketplace section and select "Plugins"

- Search for "MistralAI"

- Install and activate the plugin

- Configure your API settings in Administration > General Settings > MistralAI

Alternatively, download the plugin from GitHub and extract it to your /plugins folder.

What do I need to make it work?

You need a MistralAI API key, which you can obtain at https://docs.mistral.ai/api. If you're using a custom host (like a self-hosted LLM), an API key may be optional.

Can I use models other than MistralAI's?

Yes! The plugin supports any MistralAI-compatible API endpoint. You can connect to:

- Azure MistralAI

- Self-hosted solutions (Ollama, LocalAI, vLLM)

- Other providers (Anthropic via proxy, etc.)

Simply configure the custom host URL in the plugin settings.

Which models are supported?

The plugin includes presets for:

- Mistral Large

- Mistral Medium

- Mistral Small

- Open Mistral Nemo

- Codestral

- Pixtral Large

- Ministral 8B

- Ministral 3B

You can also specify any custom model name for models not in the preset list.

Is the plugin available to all users in my Matomo instance?

Yes, once activated, all users with view permissions can access the AI features for their permitted sites.

Can I configure different settings per website?

Yes! Use Measurable Settings to override the system-wide host, API key, model, and prompts for specific websites. Leave fields empty to use system defaults.

How do I get insights for a report?

- Navigate to any report in Matomo

- Click the "Insights" button (AI icon) in the report header

- View AI-generated insights in the side panel

- Ask follow-up questions to dive deeper into the data

Does the plugin support streaming responses?

Yes, real-time streaming responses are supported. The plugin automatically falls back to non-streaming mode if your server doesn't support Server-Sent Events (SSE).
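For readers unfamiliar with SSE, the sketch below shows how a client might assemble text from a chat-completions style event stream, where each event is a `data:` line carrying a JSON chunk and the stream ends with `data: [DONE]`. This assumes the upstream streaming format of a compatible API; the events that `MistralAI.getStreamingResponse` sends to the browser may be shaped differently, and `iter_stream_text` is a hypothetical helper.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from chat-completions style SSE lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators, comments, and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


# Hand-written sample events, for illustration only.
sample = [
    'data: {"choices": [{"delta": {"content": "Visits "}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "rose."}}]}',
    "",
    "data: [DONE]",
]
answer = "".join(iter_stream_text(sample))
```

In non-streaming mode the whole answer arrives in one response instead, so no incremental parsing is needed.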

Can I customize the AI's behavior?

Yes, you can customize:

- Chat Base Prompt: Controls how the AI responds in chat conversations

- Insight Base Prompt: Controls how the AI analyzes report data

These can be set globally or per website.
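A common way to apply a base prompt with chat-style APIs is to prepend it as a `system` message ahead of the conversation. The sketch below shows that pattern; whether the plugin composes its requests exactly this way is an assumption, and `with_base_prompt` is a hypothetical helper.

```python
def with_base_prompt(base_prompt: str, messages: list) -> list:
    """Prepend the base prompt as a system message, unless it is empty."""
    if not base_prompt.strip():
        return list(messages)
    return [{"role": "system", "content": base_prompt}] + list(messages)


# Example prompt and question, for illustration only.
conversation = with_base_prompt(
    "You are an analytics assistant. Keep answers short.",
    [{"role": "user", "content": "Why did bounce rate increase?"}],
)
```

The original message list is left untouched, so the same conversation can be reused with a different base prompt per website.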

What languages are supported?

The plugin interface is translated into:

- English

- German (Deutsch)

- Spanish (Español)

- French (Français)

- Italian (Italiano)

- Dutch (Nederlands)

- Swedish (Svenska)

What are the requirements?

- Matomo 5.0.0 or higher

- PHP 7.4 or higher

- Valid API key (for MistralAI) or accessible custom host

Is my data sent to MistralAI?

When you use the Insights feature or Chat, the relevant report data and your messages are sent to the configured API endpoint (MistralAI by default). If you have data privacy concerns, consider using a self-hosted LLM solution.

How can I contribute to this plugin?

You can contribute by:

- Reporting issues on GitHub

- Forking the project and submitting pull requests

- Contacting the developer at ronan@openmost.io

How long will this plugin be maintained?

The plugin is actively maintained. The developer uses Matomo on many projects and will continue to patch and improve the plugin.